On 23rd July, Ian Hickson, the HTML5 editor, posted an update to the WHATWG mailing list introducing the first draft of an accessibility platform for the HTML5 <video> element. The platform provides for captions, subtitles, audio descriptions, chapter markers and similar time-synchronized text, both in-band (inside the video resource) and out-of-band (as external text files). Right now, the proposal only covers <video>, but I personally believe the same can be applied to the <audio> element, except that we have to be a bit more flexible with the rendering approach. Anyway…

What I want to do here is to summarize what was introduced, together with the improvements that I and some others have proposed in follow-up emails, and list some of the media accessibility needs that we are not yet dealing with.

For those who only want to read selected sections, here is a table of contents of this rather long blog post:

- THE WebSRT TIMED TEXT FORMAT

- ASSOCIATING EXTERNAL TIMED TEXT RESOURCES WITH A VIDEO

- EXPOSING A LIST OF TimedTracks TO JAVASCRIPT

- RENDERING TimedTracks

- SUMMARY AND FURTHER NEEDS

THE WebSRT TIMED TEXT FORMAT

The first part, and probably the most surprising one for everyone, is the new file format that is being proposed to contain out-of-band time-synchronized text for video. A new format was deemed necessary after an analysis of all relevant existing formats determined that they were either insufficient or hard to use in a Web environment.

The new format is called WebSRT and is an extension to the existing SRT SubRip format. It is actually also the part of the new specification that I am personally most uncomfortable with. Not that WebSRT is a bad format. It’s just not sufficient yet to provide all the functionality that a good time-synchronized text format for Web media should. Let’s look at some examples.

WebSRT is composed of a sequence of timed text cues (that’s what we’ve decided to call the pieces of text that are active during a certain time interval). Because of its SRT ancestry, the text cues can optionally be numbered. The content of the text cues is currently allowed to contain three different types of text: plain text, minimal markup, and anything at all (also called “metadata”).

In its simplest form, a WebSRT file is just an ordinary old SRT file with optional cue numbers and only plain text in cues:

1
00:00:15.00 --> 00:00:17.95
At the left we can see...

2
00:00:18.16 --> 00:00:20.08
At the right we can see the...

3
00:00:20.11 --> 00:00:21.96
...the head-snarlers
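To make the cue structure concrete, here is a minimal JavaScript sketch of how such a file could be split into cues. The names parseTimestamp and parseCues are made up for illustration, and the code assumes hh:mm:ss.fraction timestamps and blank-line-separated cues as in the example; it is not the parsing algorithm from the specification.

```javascript
// Sketch only: assumes timestamps look like "00:00:15.00" and that
// cues are separated by blank lines, as in the example above.
function parseTimestamp(ts) {
  const match = /^(\d{2}):(\d{2}):(\d{2})\.(\d+)$/.exec(ts);
  if (!match) throw new Error("Bad timestamp: " + ts);
  const [, h, m, s, frac] = match;
  // Convert to seconds, keeping the fractional part.
  return Number(h) * 3600 + Number(m) * 60 + Number(s) + Number("0." + frac);
}

function parseCues(text) {
  const cues = [];
  for (const block of text.trim().split(/\n\s*\n/)) {
    const lines = block.split("\n").map((line) => line.trim());
    // An optional cue number may precede the timing line.
    const id = lines[0].includes("-->") ? "" : lines.shift();
    const timing = lines.shift();
    if (timing === undefined) throw new Error("Missing timing line in: " + block);
    const [start, arrow, end, ...settings] = timing.split(/\s+/);
    if (arrow !== "-->") throw new Error("Missing --> in: " + block);
    cues.push({
      id,
      startTime: parseTimestamp(start),
      endTime: parseTimestamp(end),
      settings, // anything after the end time, left unparsed here
      text: lines.join("\n"),
    });
  }
  return cues;
}
```

Calling parseCues on the three-cue example should yield three objects whose id, startTime, endTime and text fields mirror the file.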

A slightly more complex example results if we introduce minimal markup:

00:00:15.00 --> 00:00:17.95 A:start
Auf der <i>linken</i> Seite sehen wir...

00:00:18.16 --> 00:00:20.08 A:end
Auf der <b>rechten</b> Seite sehen wir die....

00:00:20.11 --> 00:00:21.96 A:end
<1>...die Enthaupter.

00:00:21.99 --> 00:00:24.36 A:start
<2>Alles ist sicher. Vollkommen <b>sicher</b>.

and add some CSS to provide colors and special formatting:

::cue { background: rgba(0,0,0,0.5); }

::cue-part(1) { color: red; }

::cue-part(2, b) { font-style: normal; text-decoration: underline; }

Minimal markup accepts <i>, <b>, <ruby> and a timestamp in <>, providing for italics, bold, and ruby markup as well as karaoke timestamps. Any further styling can be done using the CSS pseudo-elements ::cue and ::cue-part, which accept the properties ‘color’, ‘text-shadow’, ‘text-outline’, ‘background’, ‘outline’, and ‘font’.

Note that positioning requires special cue settings appended after the start/end timestamps; these can specify vertical text, line position, text position, size and alignment. Here is an example with vertically rendered Chinese text, right-aligned at 98% of the video frame:

00:00:15.00 --> 00:00:17.95 A:start D:vertical L:98%
在左边我们可以看到...

00:00:18.16 --> 00:00:20.08 A:start D:vertical L:98%
在右边我们可以看到...

00:00:20.11 --> 00:00:21.96 A:start D:vertical L:98%
...捕蝇草械.

00:00:21.99 --> 00:00:24.36 A:start D:vertical L:98%
一切都安全. 非常地安全.
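As a rough illustration of how these settings could be read, here is a small JavaScript sketch that turns the text after the end timestamp into a settings object. The letters A, D and L are taken from the examples; T for text position and S for size are my assumption, and the function name parseCueSettings and the property names are hypothetical.

```javascript
// Sketch only. A, D and L appear in the examples above;
// T (text position) and S (size) are assumed letters.
const SETTING_NAMES = new Map([
  ["A", "alignment"],
  ["D", "direction"],
  ["L", "linePosition"],
  ["T", "textPosition"],
  ["S", "size"],
]);

function parseCueSettings(settingsText) {
  const result = {};
  for (const token of settingsText.trim().split(/\s+/)) {
    const sep = token.indexOf(":");
    if (sep < 1) continue; // not of the form "X:value"
    const name = SETTING_NAMES.get(token.slice(0, sep));
    // Unknown settings are silently ignored in this sketch.
    if (name) result[name] = token.slice(sep + 1);
  }
  return result;
}
```

For the Chinese example, parseCueSettings("A:start D:vertical L:98%") would give an object with alignment "start", direction "vertical" and linePosition "98%".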

Finally, WebSRT files can be authored with abstract metadata inside cues, which practically means anything at all. Here’s an example with HTML content:

00:00:15.00 --> 00:00:17.95 A:start
<img src="pic1.png"/>Auf der <i>linken</i> Seite sehen wir...

00:00:18.16 --> 00:00:20.08 A:end
<img src="pic2.png"/>Auf der <b>rechten</b> Seite sehen wir die....

00:00:20.11 --> 00:00:21.96 A:end
<img src="pic3.png"/>...die <a href="http://members.chello.nl/j.kassenaar/elephantsdream/subtitles.html">Enthaupter</a>.

00:00:21.99 --> 00:00:24.36 A:start
<img src="pic4.png"/>Alles ist <mark>sicher</mark>.<br/>Vollkommen <b>sicher</b>.

Here is another example with JSON in the cues:

00:00:00.00 --> 00:00:44.00
{
  "slide": "intro.png",
  "title": "\"Really Achieving Your Childhood Dreams\" by Randy Pausch, Carnegie Mellon University, Sept 18, 2007"
}

00:00:44.00 --> 00:01:18.00
{
  "slide": "elephant.png",
  "title": "The elephant in the room..."
}

00:01:18.00 --> 00:02:05.00
{
  "slide": "denial.png",
  "title": "I'm not in denial..."
}
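A script could consume such metadata cues roughly as follows. This is only a sketch: it assumes the cue payload is strict JSON (quoted keys and string values) and that the cue object offers the getCueAsSource() method of the TimedTrackCue interface described further down; the function name readMetadataCue is made up.

```javascript
// Sketch only: returns the parsed payload of a metadata cue,
// or null if the cue text is not valid JSON.
function readMetadataCue(cue) {
  try {
    return JSON.parse(cue.getCueAsSource());
  } catch (err) {
    return null;
  }
}

// Usage with a stand-in cue object (a real cue would come from a track):
const fakeCue = {
  getCueAsSource: () =>
    '{"slide": "elephant.png", "title": "The elephant in the room..."}',
};
const slideInfo = readMetadataCue(fakeCue);
```

A page could then swap in the image named by slideInfo.slide whenever the cue becomes active.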

What I like about WebSRT:

- it allows for all sorts of different content in the text cues – plain text is useful for texted audio descriptions, minimal markup is useful for subtitles, captions, karaoke and chapters, and “metadata” is useful for, well, any data.

- it can be easily encapsulated into media resources and thus turned into in-band tracks by regarding each cue as a data packet with time stamps.

- it is not verbose

Where I think WebSRT still needs improvements:

- break with the SRT history: since WebSRT and SRT files are so different, WebSRT should get its own MIME type, e.g. text/websrt, and file extension, e.g. .wsrt; this will free WebSRT for changes that wouldn’t be possible while trying to remain conformant with SRT

- introduce some header fields into WebSRT: the format needs

  - file-wide name-value metadata, such as author, date, copyright, etc.

  - language specification for the file as a hint for font selection and speech synthesis

  - a possibility for style sheet association in the file header

  - a means to identify which parser is required for the cues

  - a magic identifier and a version string of the format

- allow innerHTML as an additional format in the cues with the CSS pseudo-elements applying to all HTML elements

- allow full use of CSS instead of just the restricted features and also use it for positioning instead of the hard-to-understand positioning hints

- in the minimal markup, provide a neutral structuring element such as <span @id @class @lang> to associate specific styles or specific languages with a subpart of the cue

Note that I undertook some experiments with an alternative, XML-based format called WMML to gain most of these insights and to determine the advantages and disadvantages of an XML-based format. The foremost advantage is that newlines in the source are not automatically turned into displayed line breaks, which can make the source text file more readable. The foremost disadvantages are verbosity and the need for a simple encoding step that removes all encapsulating header-type content from around the timed text cues before encoding them into a binary media resource.

ASSOCIATING EXTERNAL TIMED TEXT RESOURCES WITH A VIDEO

Now that we have a timed text format, we need to be able to associate it with a media resource in HTML5. This is what the <track> element was introduced for. It associates the timestamps in the timed text cues with the timeline of the video resource. The browser is then expected to render these during the time interval in which the cues are expected to be active.

Here is an example for how to associate multiple subtitle tracks with a video:

<video src="california.webm" controls>

<track label="English" kind="subtitles" src="calif_eng.wsrt" srclang="en">

<track label="German" kind="subtitles" src="calif_de.wsrt" srclang="de">

<track label="Chinese" kind="subtitles" src="calif_zh.wsrt" srclang="zh">

</video>

In this case, the UA is expected to provide a text menu with a subtitle entry with these three tracks and their label as part of the video controls. Thus, the user can interactively activate one of the tracks.

Here is an example for multiple tracks of different kinds:

<video src="california.webm" controls>

<track label="English" kind="subtitles" src="calif_eng.wsrt" srclang="en">

<track label="German" kind="captions" src="calif_de.wsrt" srclang="de">

<track label="French" kind="chapter" src="calif_fr.wsrt" srclang="fr">

<track label="English" kind="metadata" src="calif_meta.wsrt" srclang="en">

<track label="Chinese" kind="descriptions" src="calif_zh.wsrt" srclang="zh">

</video>

In this case, the UA is expected to provide a text menu with a list of track kinds with one entry each for subtitles, captions and descriptions through the controls. The chapter tracks are expected to provide some sort of visual subdivision on the timeline and the metadata tracks are not exposed visually, but are only available through the JavaScript API.

Here are several ideas for improving the <track> specification:

- <track> is currently only defined for WebSRT resources – it should be made generic and then browsers can compete on the formats for which they provide support. WebSRT could be the baseline format. A @type attribute could be added to hint at the MIME type of the provided resource.

- <track> needs a means for authors to mark certain tracks as active and others as inactive. This can be overridden by browser settings, e.g. on @srclang, and by user interaction.

- karaoke and lyrics are supported by WebSRT, but aren’t in the HTML5 spec as track kinds – they should be added and made visible like subtitles or captions.

EXPOSING A LIST OF TimedTracks TO JAVASCRIPT

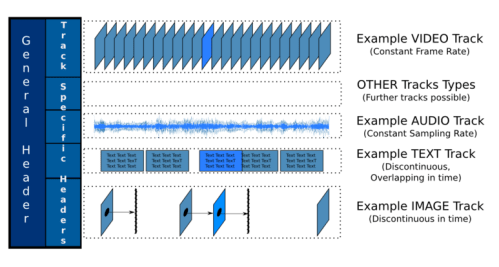

This is where we take an extra step and move to a uniform handling of both in-band and out-of-band timed text tracks. Further, a third type of timed text track has been introduced in the form of a MutableTimedTrack, i.e. one that can be authored and added through JavaScript alone.

The JavaScript API that is exposed for any of these track types is identical. A media element now has this additional IDL interface:

interface HTMLMediaElement : HTMLElement {

...

readonly attribute TimedTrack[] tracks;

MutableTimedTrack addTrack(in DOMString label, in DOMString kind,

in DOMString language);

};

A media element thus manages a list of TimedTracks and provides for adding TimedTracks through addTrack().

The timed tracks are associated with a media resource in the following order:

- The <track> element children of the media element, in tree order.

- Tracks created through the addTrack() method, in the order they were added, oldest first.

- In-band timed text tracks, in the order defined by the media resource’s format specification.
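The ordering above can be sketched in a few lines of JavaScript. The function name orderTimedTracks and its three input lists are hypothetical; each list is assumed to be already sorted in its own order (tree order, insertion order, and the order given by the media format respectively).

```javascript
// Sketch only: builds the combined track list in the order described above.
function orderTimedTracks(trackElementTracks, scriptAddedTracks, inBandTracks) {
  return [
    ...trackElementTracks, // <track> children, in tree order
    ...scriptAddedTracks,  // added via addTrack(), oldest first
    ...inBandTracks,       // as ordered by the media resource's format
  ];
}

// Usage with stand-in objects:
const ordered = orderTimedTracks(
  [{ label: "English" }, { label: "German" }],
  [{ label: "Scripted notes" }],
  [{ label: "In-band captions" }]
);
```

Under these assumptions, ordered holds the two <track> tracks first, then the scripted one, then the in-band one.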

The IDL interface of a TimedTrack is as follows:

interface TimedTrack {

readonly attribute DOMString kind;

readonly attribute DOMString label;

readonly attribute DOMString language;

readonly attribute unsigned short readyState;

attribute unsigned short mode;

readonly attribute TimedTrackCueList cues;

readonly attribute TimedTrackCueList activeCues;

readonly attribute Function onload;

readonly attribute Function onerror;

readonly attribute Function oncuechange;

};

The first three capture the values of the @kind, @label and @srclang attributes of the <track> element. For MutableTimedTracks they are provided through the addTrack() function, and for in-band tracks they are exposed from metadata in the binary resource.

The readyState captures whether the data is available and is one of “not loaded”, “loading”, “loaded”, “failed to load”. Data is only available in the “loaded” state.

The mode attribute captures whether the data is activated for display and is one of “disabled”, “hidden” and “showing”. In the “disabled” mode, the UA doesn’t have to download the resource, allowing for some bandwidth management.

The cues and activeCues attributes provide the list of parsed cues for the given track and the subpart thereof that is currently active.

The onload, onerror, and oncuechange functions are event handlers for the load, error and cuechange events of the TimedTrack.

Individual cues expose the following IDL interface:

interface TimedTrackCue {

readonly attribute TimedTrack track;

readonly attribute DOMString id;

readonly attribute float startTime;

readonly attribute float endTime;

DOMString getCueAsSource();

DocumentFragment getCueAsHTML();

readonly attribute boolean pauseOnExit;

readonly attribute Function onenter;

readonly attribute Function onexit;

readonly attribute DOMString direction;

readonly attribute boolean snapToLines;

readonly attribute long linePosition;

readonly attribute long textPosition;

readonly attribute long size;

readonly attribute DOMString alignment;

readonly attribute DOMString voice;

};

The @track attribute links the cue to its TimedTrack.

The @id, @startTime, @endTime attributes expose a cue identifier and its associated time interval. The getCueAsSource() and getCueAsHTML() functions provide either the unparsed cue text content or the text content parsed into an HTML DOM subtree.

The @pauseOnExit attribute can be set to true/false and indicates whether at the end of the cue’s time interval the media playback should be paused and wait for user interaction to continue. This is particularly important as we are trying to support extended audio descriptions and extended captions.

The onenter and onexit functions are event handlers for the enter and exit events of the TimedTrackCue.

The @direction, @snapToLines, @linePosition, @textPosition, @size, @alignment and @voice attributes expose WebSRT positioning and semantic markup of the cue.
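To illustrate how @startTime and @endTime relate to a track's activeCues list, here is a small JavaScript sketch that computes the active subset for a given playback time. The function name activeCuesAt is made up, and treating the end time as exclusive is my assumption rather than something the draft text above states.

```javascript
// Sketch only: returns the cues whose interval contains currentTime.
// Assumes start-inclusive, end-exclusive intervals.
function activeCuesAt(cues, currentTime) {
  return Array.from(cues).filter(
    (cue) => cue.startTime <= currentTime && currentTime < cue.endTime
  );
}

// Usage with stand-in cue objects:
const sampleCues = [
  { id: "1", startTime: 15.0, endTime: 17.95 },
  { id: "2", startTime: 18.16, endTime: 20.08 },
];
const activeAt16 = activeCuesAt(sampleCues, 16);
```

With these sample cues, only the first cue is active at 16 seconds, and none is active in the gap at 18 seconds.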

My only concerns with this part of the specification are:

- The WebSRT-related attributes in the TimedTrackCue are in conflict with CSS attributes and really should not be introduced into HTML5, since they are WebSRT-specific. They will not exist in other types of in-band or out-of-band timed text tracks. As there is a mapping to do already, why not rely on already available CSS features?

- There is no API to expose header-specific metadata from timed text tracks into JavaScript. Things such as the copyright holder, the creation date and the usage rights of a timed text track would be useful to have available. I would propose to add a list of name-value metadata elements to the TimedTrack API.

- In addition, I would propose to allow media fragment hyperlinks in a <video> @src attribute to point to the @id of a TimedTrackCue, thus defining that the playback position should be moved to the time offset of that TimedTrackCue. This is a useful feature and builds on bringing named media fragment URIs and TimedTracks together.
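The last proposal could work roughly like the following JavaScript sketch. It assumes a named fragment written as #id=..., and track objects with a cues list as in the TimedTrack interface; the function name startTimeForFragment is hypothetical and none of this is part of the current draft.

```javascript
// Sketch only: resolves a "#id=<cue id>" fragment to a cue start time.
// Returns null when there is no such fragment or no matching cue.
function startTimeForFragment(url, tracks) {
  const hash = new URL(url).hash; // e.g. "#id=chapter2"
  const match = /^#id=(.+)$/.exec(hash);
  if (!match) return null;
  const wantedId = decodeURIComponent(match[1]);
  for (const track of tracks) {
    for (const cue of track.cues) {
      if (cue.id === wantedId) return cue.startTime;
    }
  }
  return null;
}

// Usage with stand-in track data:
const chapterTracks = [
  { cues: [{ id: "chapter1", startTime: 0 }, { id: "chapter2", startTime: 44 }] },
];
const seekTo = startTimeForFragment(
  "http://example.com/video.webm#id=chapter2",
  chapterTracks
);
```

A player could then set the playback position to seekTo before starting playback.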

RENDERING TimedTracks

The third part of the timed track framework deals with how to render the timed text cues in a Web page. The rendering rules are explained in the HTML5 rendering section.

I’ve extracted the following rough steps from the rendering algorithm:

1. All timed tracks of a media resource that are in “showing” mode are rendered together to avoid overlapping text from multiple tracks.

2. The timed track cues that are to be rendered are collected from the active timed tracks and ordered by the timed track order first and by their start time second. Where there are identical start times, the cues are ordered by their end time, earliest first, or by their creation order if all else is identical.

3. Each cue gets its own CSS box.

4. The text in the CSS boxes is positioned and formatted by interpreting the positioning and formatting instructions of WebSRT that are provided on the cues.

5. An anonymous inline CSS box is created into which all the cue CSS boxes are wrapped.

6. The wrapping CSS box gets the dimensions of the video viewport. The cue CSS boxes are positioned so they don’t overlap. The text inside the cue CSS boxes inside the wrapping CSS box is wrapped at the edges if necessary.
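The collection and ordering of cues described above can be sketched in JavaScript as follows. This is a simplification under stated assumptions: mode is compared as the string "showing" (the IDL declares it as an unsigned short), the order in which cues are encountered stands in for their creation order, and the function name collectCuesForRendering is made up.

```javascript
// Sketch only: gathers active cues from all "showing" tracks and sorts them
// by track order, then start time, then end time, then creation order.
function collectCuesForRendering(tracks) {
  const entries = [];
  let creationIndex = 0;
  tracks.forEach((track, trackIndex) => {
    if (track.mode !== "showing") return; // skip hidden and disabled tracks
    for (const cue of track.activeCues) {
      entries.push({ cue, trackIndex, creationIndex: creationIndex++ });
    }
  });
  entries.sort(
    (a, b) =>
      a.trackIndex - b.trackIndex ||
      a.cue.startTime - b.cue.startTime ||
      a.cue.endTime - b.cue.endTime ||
      a.creationIndex - b.creationIndex
  );
  return entries.map((entry) => entry.cue);
}

// Usage with stand-in tracks:
const renderOrder = collectCuesForRendering([
  { mode: "showing", activeCues: [
    { id: "b", startTime: 5, endTime: 9 },
    { id: "a", startTime: 5, endTime: 7 },
  ] },
  { mode: "hidden", activeCues: [{ id: "x", startTime: 0, endTime: 10 }] },
  { mode: "showing", activeCues: [{ id: "c", startTime: 1, endTime: 2 }] },
]);
```

Here the hidden track contributes nothing, and within the first track the cue that ends earlier comes first.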

To overcome security concerns with this kind of direct rendering of a CSS box into the Web page where text comes potentially from a different and malicious Web site, it is required to have the cues come from the same origin as the Web page.

To allow application of a restricted set of CSS properties to the timed text cues, a set of pseudo-elements was introduced. This is necessary since all the CSS boxes are anonymous and cannot be addressed from the Web page. The introduced pseudo-elements are ::cue to address a complete cue CSS box, and ::cue-part to address a subpart of a cue CSS box based on a set of identifiers provided by WebSRT.

I have several issues with this approach:

- I believe that it is not a good idea to restrict rendering to same-origin files only. This will disallow linking to external captioning services (or even just a separate caption server of the same company) for providing the captions to a video. Henri Sivonen proposed a means to overcome this by parsing every cue basically as its own HTML document (well, the body of a document) and then rendering these in an iframe-like manner into the Web page. This would overcome the same-origin restriction. It would also allow us to do away with the new ::cue CSS selectors, thus simplifying the solution.

- In general I am concerned about how tightly the rendering is tied to WebSRT. Step 4 should not be in the HTML5 specification, but only apply to WebSRT. Every external format should provide its own mapping to CSS. As it is specified right now, other formats, such as 3GPP in MPEG-4 or Kate in Ogg, are required to map their format and positioning information to WebSRT instructions. These are then converted again using the WebSRT to CSS mapping rules. That seems overkill.

- I also find step 6 very limiting, since only the video viewport is regarded as a potential rendering area; this is also the reason why there is no rendering for audio elements. Instead, it would make a lot more sense if a CSS box was provided by the HTML page, with the video viewport as the default but changeable to any area on screen. This would allow rendering music lyrics under or above an audio element, or rendering captions below a video element to avoid any overlap at all.

SUMMARY AND FURTHER NEEDS

We’ve made huge progress on accessibility features for HTML5 media elements with the specifications that Ian proposed. I think we can turn it into a flexible and feature-rich framework once the improvements that Henri, myself and others have proposed are included.

This will meet most of the requirements that the W3C HTML Accessibility Task Force has collected for media elements where the requirements relate to accessibility functionality provided through alternative text resources.

However, we are not solving any of the accessibility needs that relate to alternative audio-visual tracks and resources. In particular, there is no solution yet to deal with multi-track audio or video files that have e.g. sign language or audio description tracks in them, not to speak of the issues that can be introduced through dealing with separate media resources from several sites that need to be played back in sync. The latter may be a challenge for future versions of HTML5, since the needs for such synchronisation of multiple resources have to be explored further.

As a first step, we will require an API to expose in-band tracks, a means to control their activation interactively in a UI, and a description of how they should be rendered. E.g. should a sign language track be rendered as picture-in-picture? Clear audio and sign translation are the two key accessibility needs that can be satisfied with such a multi-track solution.

Finally, another key requirement area for media accessibility is described in a section called “Content Navigation by Content Structure”. This describes the need for vision-impaired users to be able to navigate through a media resource based on semantic markup – think of it as similar to a navigation through a book by book chapters and paragraphs. The introduction of chapter markers goes some way towards satisfying this need, but chapter markers tend to address only big time intervals in a video and don’t let you navigate on a different level to subchapters and paragraphs. It is possible to provide that navigation through providing several chapter tracks at different resolution levels, but then they are not linked together and navigation cannot easily swap between resolution levels.

An alternative might be to include different resolution levels inside a single chapter track and somehow control the UI to manage them as different resolutions. This would only require an additional attribute on text cues and could be useful to other types of text tracks, too. For example, captions could be navigated based on scenes, shots, conversations, or individual captions. Some experimentation will be required here before we can introduce a sensible extension to the given media accessibility framework.